
Data Migration with AWS DMS

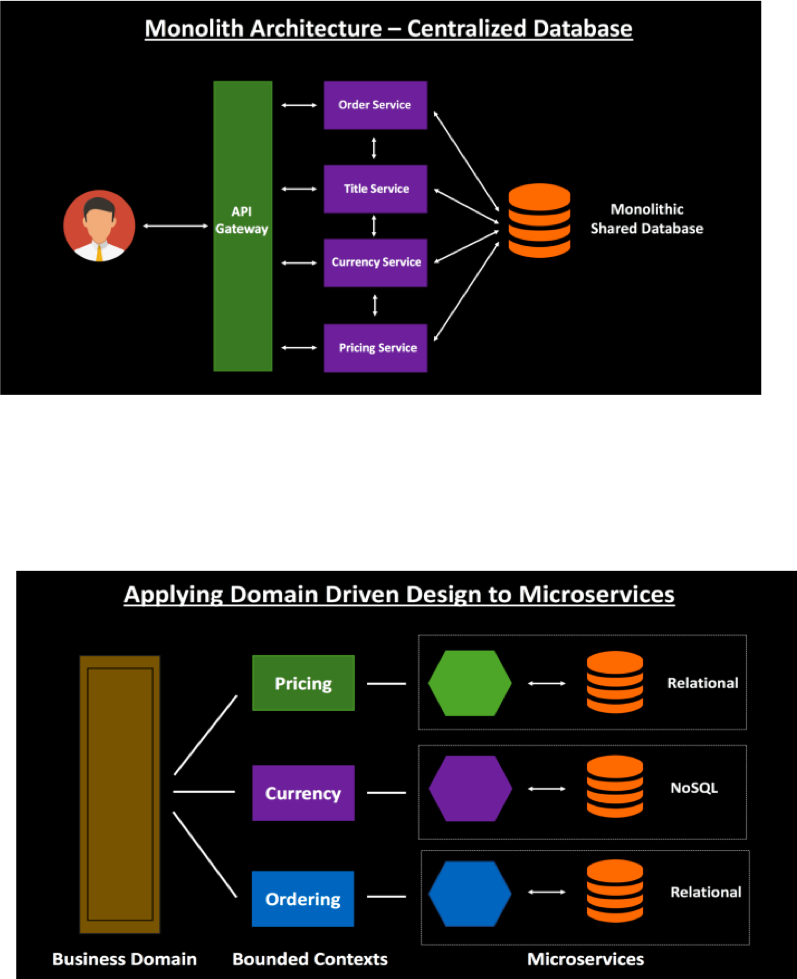

I published an architecture in a two-part series that can be a good fit for digital transformations. To move faster, we decompose big monoliths into smaller, individual microservices, which creates the need to move data from the monolith world to the microservices world. Below are a couple of use cases I had to deal with.

Use Case 1 - Breaking a Monolith into Microservices

This requires migrating data from the monolith database to the individual microservice databases.

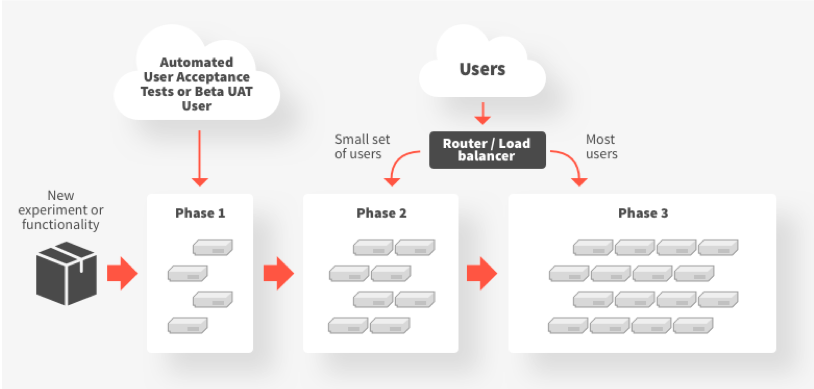

Use Case 2 - The Pilot and Canary Release

This brings the challenge of maintaining the existing monolith DB and the new microservice DB side by side, with continuous replication as customers move over.

Use Case 3 - Large-Volume Data Extraction to Delimited Files

For other microservices that are broken out of the monolith or are part of the larger ecosystem: domain-driven design and bounded contexts drive the need to share attributes between microservices.

For third-party systems, such as data or SAS providers.

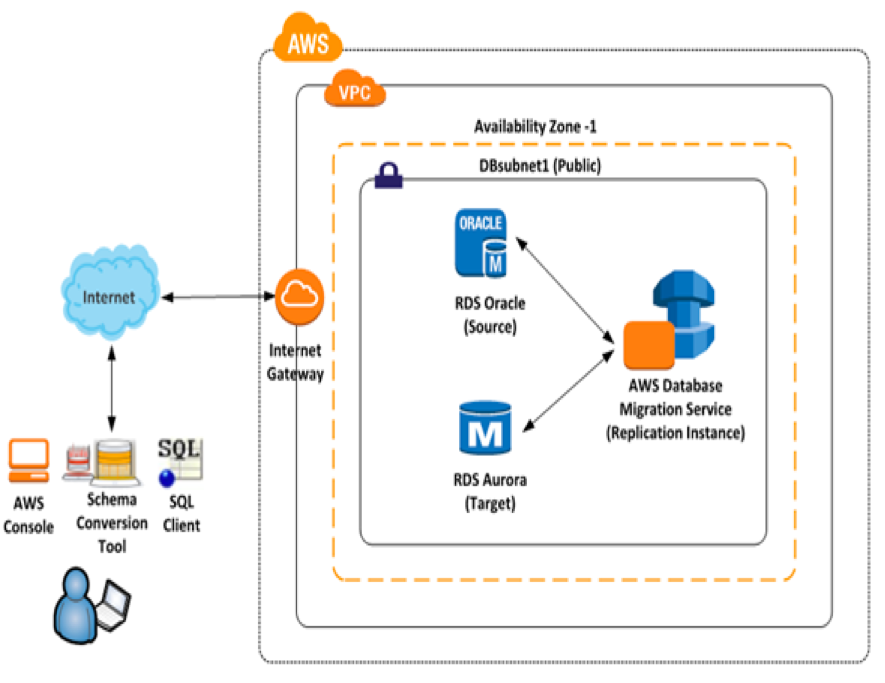

AWS DMS

To meet the above use cases, and since we were already in the AWS cloud, I chose AWS DMS.

So why AWS DMS?

AWS Database Migration Service helps you migrate databases to AWS quickly and securely. The source database remains fully operational during the migration, minimizing downtime to applications that rely on the database. The AWS Database Migration Service can migrate your data to and from most widely used commercial and open-source databases.

- Simple to use

- Minimal downtime

- Supports widely used databases

- Low cost

- Fast and easy to set up

- Reliable

At a high level, when using AWS DMS you do the following:

- Create a replication server.

- Create source and target endpoints that have connection information about your data stores.

- Create one or more migration tasks to migrate data between the source and target data stores.
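The three steps above can be sketched with the boto3 DMS client. This is only a minimal sketch, not the setup from my project: the identifiers, instance class, storage size, and the catch-all table mapping are assumptions for illustration, and the function takes the client as a parameter so it can be tried without touching a real account.

```python
import json


def table_mappings(schema: str = "%", table: str = "%") -> str:
    """Return a DMS table-mapping document that includes the matching tables."""
    rule = {
        "rule-type": "selection",
        "rule-id": "1",
        "rule-name": "include-tables",  # hypothetical rule name
        "object-locator": {"schema-name": schema, "table-name": table},
        "rule-action": "include",
    }
    return json.dumps({"rules": [rule]})


def set_up_migration(dms, source: dict, target: dict,
                     migration_type: str = "full-load") -> str:
    """Run the three steps against a DMS client and return the task ARN.

    `dms` is expected to behave like boto3.client("dms").
    `source` and `target` hold keyword arguments for create_endpoint.
    """
    # Step 1: create the replication instance (the "replication server").
    instance = dms.create_replication_instance(
        ReplicationInstanceIdentifier="monolith-split-instance",  # hypothetical
        ReplicationInstanceClass="dms.t3.medium",  # assumed size
        AllocatedStorage=50,  # GiB, assumed
    )["ReplicationInstance"]

    # Step 2: create the source and target endpoints.
    src = dms.create_endpoint(EndpointType="source", **source)["Endpoint"]
    tgt = dms.create_endpoint(EndpointType="target", **target)["Endpoint"]

    # Step 3: create the migration task that ties everything together.
    # In a real run the instance must be available before this call succeeds.
    task = dms.create_replication_task(
        ReplicationTaskIdentifier="monolith-to-microservice",  # hypothetical
        SourceEndpointArn=src["EndpointArn"],
        TargetEndpointArn=tgt["EndpointArn"],
        ReplicationInstanceArn=instance["ReplicationInstanceArn"],
        MigrationType=migration_type,
        TableMappings=table_mappings(),
    )["ReplicationTask"]
    return task["ReplicationTaskArn"]
```

With real credentials, `dms` would be `boto3.client("dms")`, and `source` and `target` would carry the connection details (engine name, server name, port, user, and so on) for each data store.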

A task can consist of three major phases:

- The full load of existing data

- The application of cached changes

- Ongoing replication

Types of load available:

- Full Load (migrate existing data): perform a one-time migration from the source endpoint to the target endpoint.

- Incremental Load (CDC, replicate data changes only): don’t perform a one-time migration, but continue to replicate data changes from the source to the target.

- Full Load + Incremental (migrate existing data and replicate ongoing changes): perform a one-time migration from the source to the target, and then continue replicating data changes from the source to the target.
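In the DMS API these three load types correspond to the `MigrationType` values `full-load`, `cdc`, and `full-load-and-cdc`. A small sketch of how that choice could be derived from two yes/no questions (the helper name `migration_type` is my own, not part of any AWS library):

```python
def migration_type(copy_existing: bool, replicate_changes: bool) -> str:
    """Map the two decisions onto the DMS MigrationType string."""
    if copy_existing and replicate_changes:
        return "full-load-and-cdc"  # Full Load + Incremental
    if copy_existing:
        return "full-load"  # one-time copy of existing data
    if replicate_changes:
        return "cdc"  # ongoing changes only
    raise ValueError("a task must copy existing data, replicate changes, or both")
```

For example, a one-off extract for a third party would use `migration_type(True, False)`, while a pilot phase that keeps two databases in sync would use `migration_type(True, True)`.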

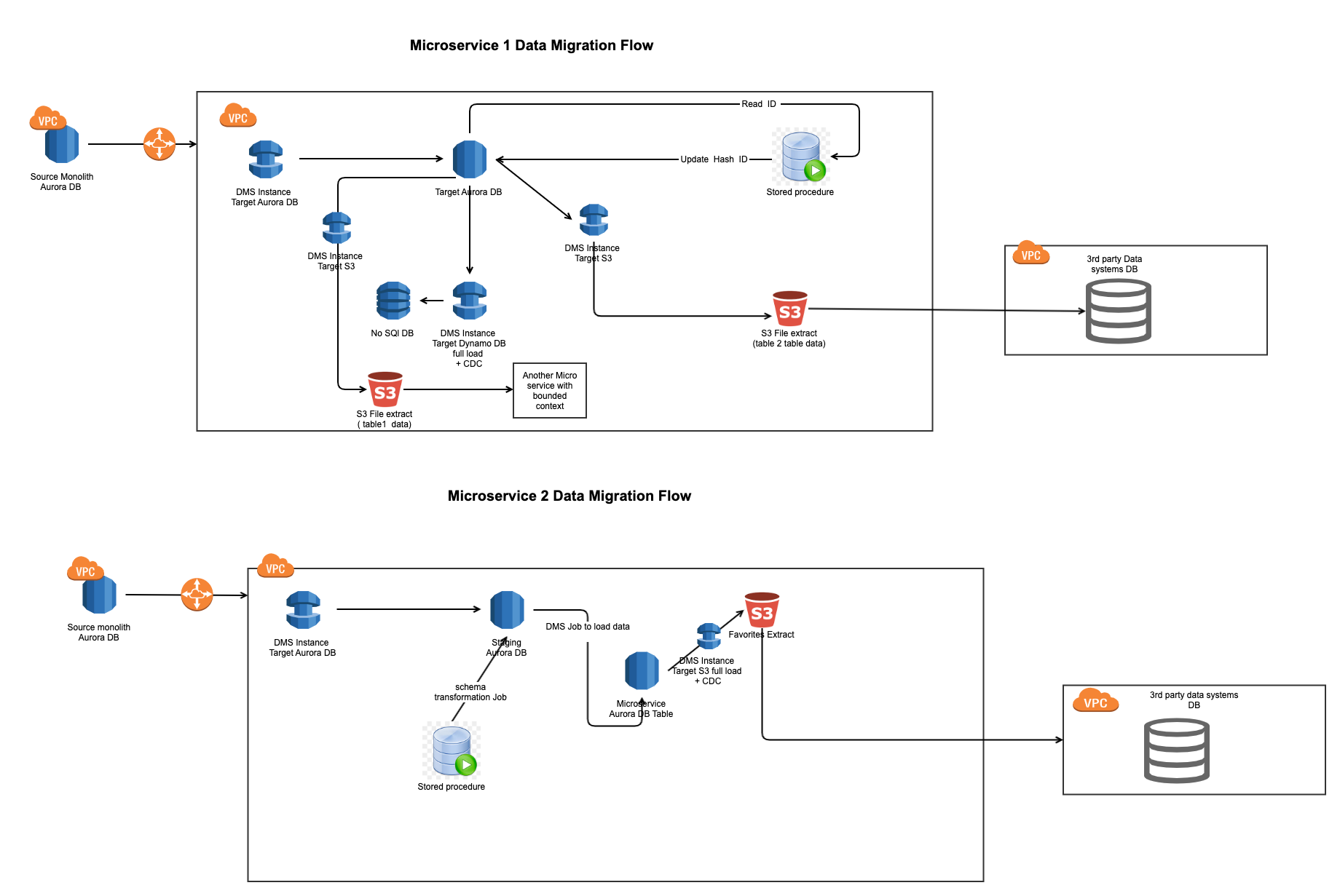

Solution I built with AWS DMS

- Aurora DB to Aurora DB homogeneous migration from monolith to microservice.

- Aurora DB to NoSQL DynamoDB for microservices broken out of the monolith.

- Aurora DB to S3, so third-party consumers can run their migration from CSV files.

- Aurora DB to Aurora DB and Aurora DB to DynamoDB CDC for continuous replication during the pilot and canary phases.
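For the S3 case, the target endpoint is what decides that the output lands as delimited files. The following is a rough sketch of the arguments one might pass to boto3's `create_endpoint` for such a target; the endpoint identifier, folder name, and default delimiter are illustrative assumptions, and the bucket and IAM role are whatever exists in your own account.

```python
def s3_csv_target(bucket: str, role_arn: str,
                  folder: str = "exports", delimiter: str = ",") -> dict:
    """Build create_endpoint keyword arguments for a CSV-on-S3 target."""
    return {
        "EndpointIdentifier": "third-party-csv-export",  # hypothetical name
        "EndpointType": "target",
        "EngineName": "s3",
        "S3Settings": {
            # Role that DMS assumes to write into the bucket.
            "ServiceAccessRoleArn": role_arn,
            "BucketName": bucket,
            "BucketFolder": folder,
            "DataFormat": "csv",
            "CsvDelimiter": delimiter,
            "CsvRowDelimiter": "\n",
        },
    }
```

A pipe-delimited export would then be `s3_csv_target("my-bucket", role_arn, delimiter="|")`, passed on as `dms.create_endpoint(**params)`.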

Caveats

- You need to be aware of the supported data types before you load the content; otherwise the results may not be what you expect.

- DMS takes a minimalist approach (it doesn’t create secondary indexes, non-primary-key constraints, or data defaults), so you need to apply those schema changes yourself on the loaded data.

- CDC can lag under high volume.

- Migrations to AWS services require the services to be in the same account or subaccount. Especially for the S3 push, this can be a challenge. S3 replication can be a solution for this.
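On the CDC lag caveat: DMS publishes task latency metrics to CloudWatch (for example `CDCLatencySource` and `CDCLatencyTarget`, in seconds), so one way to keep an eye on it is to flag datapoints above a threshold. The sketch below assumes datapoints shaped like the ones CloudWatch's `get_metric_statistics` returns when the `Maximum` statistic is requested; the five-minute threshold is an arbitrary assumption, not a recommendation.

```python
def lagging_datapoints(datapoints: list, threshold_seconds: float = 300.0) -> list:
    """Return the datapoints whose maximum latency exceeds the threshold.

    Each datapoint is a dict with at least "Timestamp" and "Maximum" keys.
    The result is ordered from oldest to newest.
    """
    late = [p for p in datapoints if p["Maximum"] > threshold_seconds]
    return sorted(late, key=lambda p: p["Timestamp"])
```

If this list keeps growing during the pilot and canary phase, that is a hint that the replication is not keeping up with the change volume.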

Connect with Me

If you are interested in a conversation or a chat, please reach me on LinkedIn.