Cloud Native Serverless Log Analytics

Insight drawn from the information your application logs can be critical to the success of the product and to business ROI. It is also part of the operational excellence pillar of the Well-Architected Framework. Log analytics supports business KPIs and customer insights, and it tells you what you need to know to keep your applications secure, healthy and reliable.

Log analytics covers application logs, audit logs, platform logs, user logs and more. At scale, however, log management runs into challenges with:

- Storage and Archiving

- Ingestion

- Reporting

- Searching

- Automation

Cloud-native serverless log aggregation and analytics solutions alleviate some of these issues. A cloud-native solution offers not only vast storage with lifecycle rules for archiving but also managed services for reporting and searching, with hooks into commonly used platforms like ELK or Splunk. Infrastructure-as-code (IaC) tools like Terraform bring automation to this architecture.

In this post, I cover the solutions I built on the AWS and GCP public clouds. I have also automated both with Terraform, and the code is linked on GitHub below.

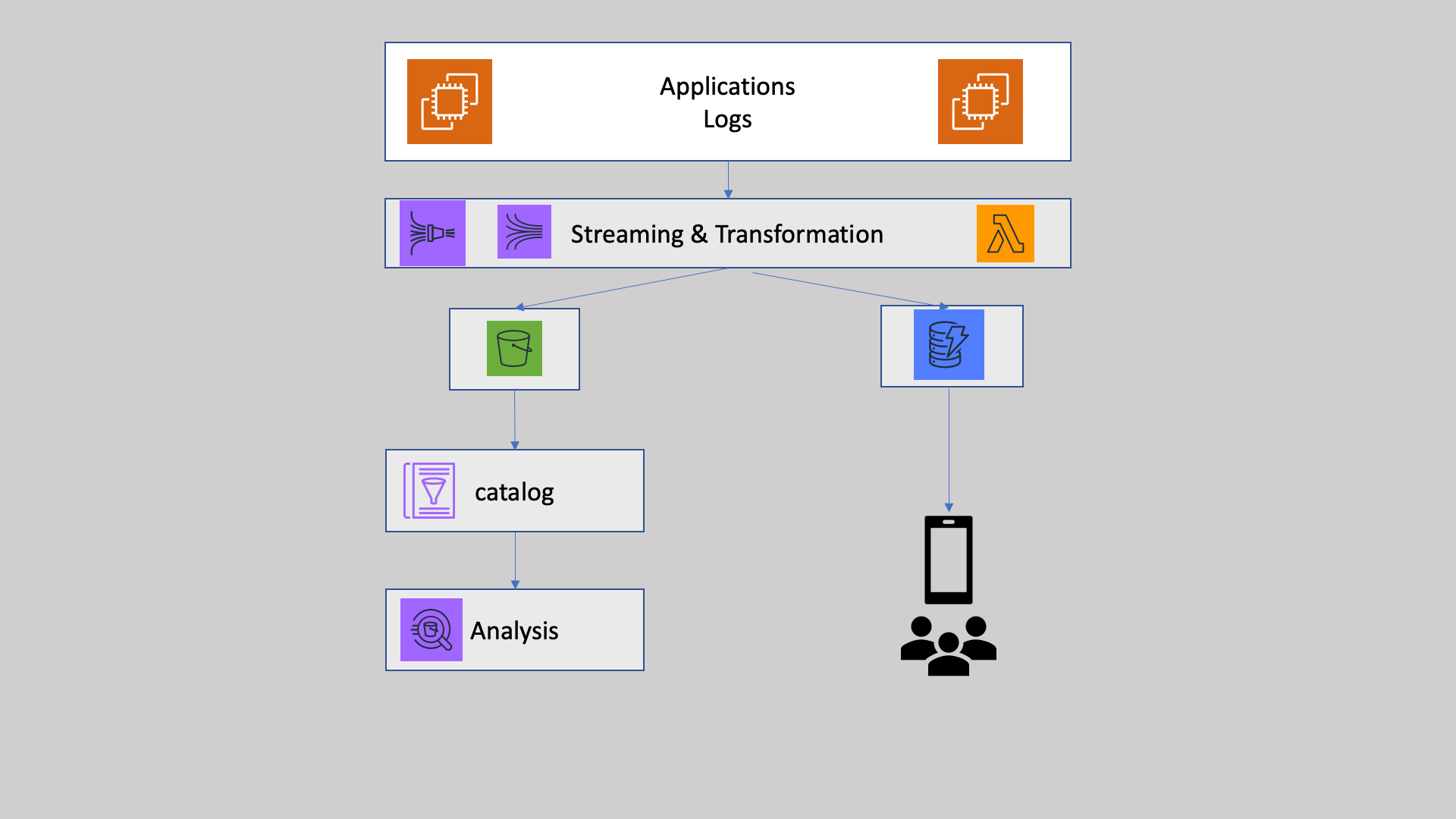

AWS Based Solution

I have used Kinesis streams to push logs from the application tier, given the volume of logs to be handled and their streaming nature: you want to act on them in near real time. I have used a plain EC2 instance with the Kinesis agent, installed and configured from the user-data script. In Lambda or EKS based applications, the AWS SDK can be used to push data to Kinesis streams.

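    sudo yum install aws-kinesis-agent -y
    ........
    # Write the agent configuration. The redirect is performed by the calling
    # shell, so sudo on cat has no effect and is dropped here.
    # Note: the agent reads /etc/aws-kinesis/agent.json by default.
    cat <<EOF > ~/agent.json
    {
      "cloudwatch.emitMetrics": true,
      "flows": [
        {
          "filePattern": "/var/log/cadabra/*log",
          "deliveryStream": "PurchaseLogs"
        }
      ]
    }
    EOF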
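For the Lambda or EKS case mentioned above, where the agent is not an option, the SDK call can be sketched roughly as follows. This is a minimal sketch, not the code from the repo. It assumes that `PurchaseLogs` is a Firehose delivery stream (the agent config uses the `deliveryStream` key) and that `client` behaves like `boto3.client("firehose")`. The helper `send_log_record`, the `FakeFirehose` stand-in and the sample fields are hypothetical and only there so the sketch runs without AWS credentials.

```python
import json


def send_log_record(client, stream_name, record):
    """Send one log record to a Firehose delivery stream.

    `client` is expected to behave like boto3.client("firehose").
    """
    # Firehose does not add delimiters between records, so end each with a newline.
    payload = (json.dumps(record) + "\n").encode("utf-8")
    return client.put_record(
        DeliveryStreamName=stream_name,
        Record={"Data": payload},
    )


class FakeFirehose:
    """Hypothetical stand-in that records calls, for trying the sketch offline."""

    def __init__(self):
        self.calls = []

    def put_record(self, **kwargs):
        self.calls.append(kwargs)
        return {"RecordId": "fake-record-id"}


# With real credentials this would be: client = boto3.client("firehose")
fake = FakeFirehose()
response = send_log_record(fake, "PurchaseLogs", {"order_id": "1001", "amount": 12.5})
```

Passing the client in as an argument keeps the function easy to test and lets the same code run against a real client in Lambda or in a pod on EKS.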

Once the logs start streaming, Kinesis Firehose and Lambda transform them into the required JSON format. S3 is used for storage and archiving, and it feeds the data to Glue, which catalogs it for analytics. Athena, a serverless query engine, helps with analysis on the fly or with saved queries. You can export the reports for offline consumption or visualization.

I have also pushed the log stream to DynamoDB so that client-facing mobile or web applications can consume it.

All of this code is available as Terraform IaC in the GitHub repo below. https://github.com/acermile/log-analytics-aws-terraform.git
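The Firehose and Lambda transformation step can be illustrated with a small handler. This is a sketch under assumptions, not the function from the repo: the comma-separated layout and the names in `FIELDS` are hypothetical, since the post does not show the raw log format. What the sketch does follow is the Firehose transformation contract: each incoming record carries a `recordId` and base64-encoded `data`, and each returned record carries the same `recordId`, a `result` and re-encoded `data`.

```python
import base64
import json

# Hypothetical column names; the real log layout is not given in this post.
FIELDS = ["order_id", "item", "quantity", "unit_price"]


def transform_line(line):
    """Turn one comma-separated log line into a JSON string, or None if malformed."""
    parts = [p.strip() for p in line.split(",")]
    if len(parts) != len(FIELDS):
        return None
    return json.dumps(dict(zip(FIELDS, parts)))


def lambda_handler(event, context):
    """Firehose data-transformation handler: one output record per input record."""
    output = []
    for record in event["records"]:
        line = base64.b64decode(record["data"]).decode("utf-8").strip()
        doc = transform_line(line)
        if doc is None:
            # Return the original bytes and flag the record as failed.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
            continue
        # Newline-delimited JSON keeps one document per line in S3.
        encoded = base64.b64encode((doc + "\n").encode("utf-8")).decode("utf-8")
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": encoded,
        })
    return {"records": output}
```

Writing one JSON document per line is a common choice here because it lets downstream tools read the S3 objects line by line.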

GCP Based Solution

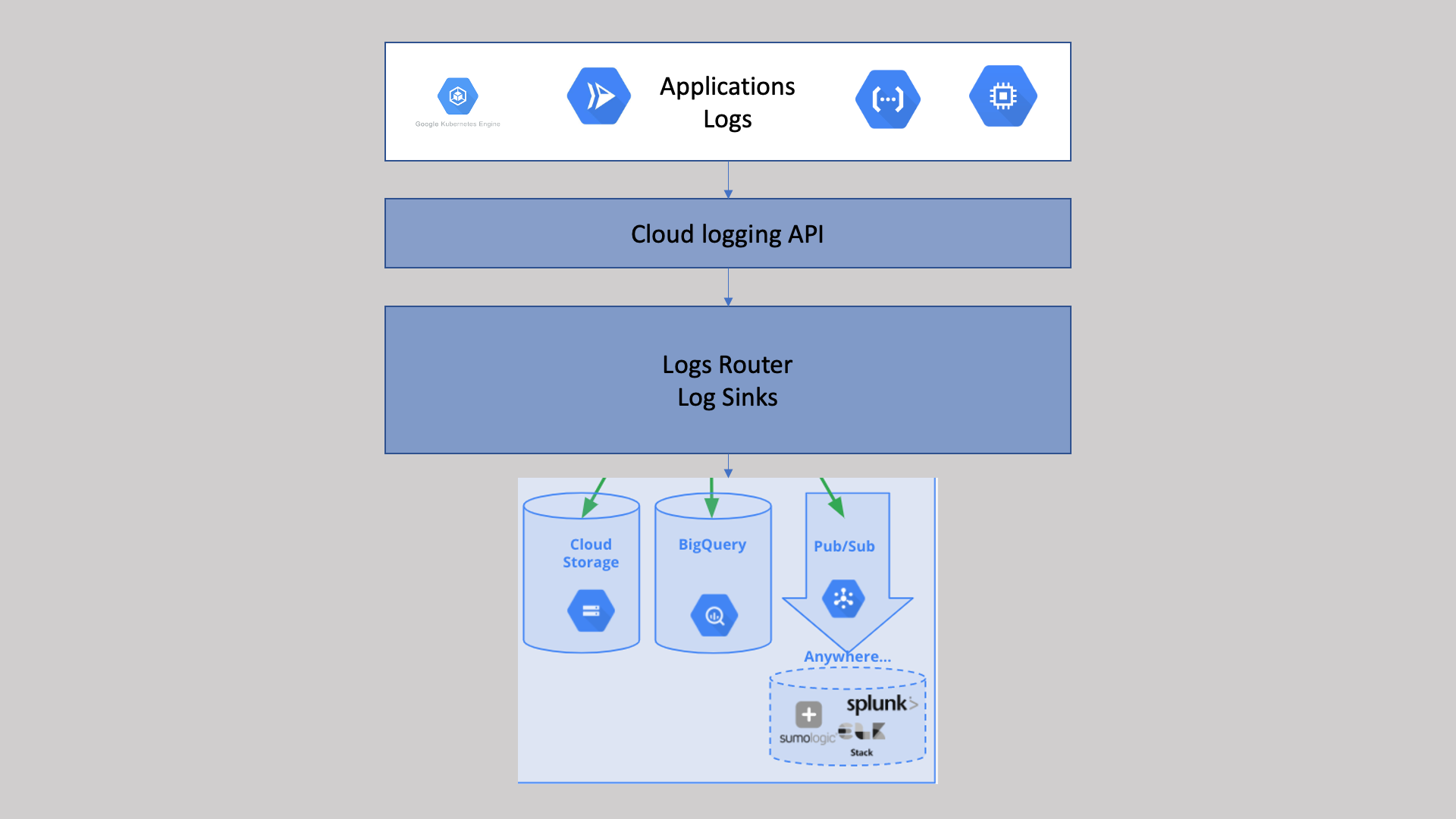

For GCP, I have relied heavily on the Cloud Logging architecture. All logs are sent to the Cloud Logging API, where they pass through the Logs Router. The Logs Router checks each log entry against existing rules to determine which entries to ingest (store), which to include in exports, and which to discard. To ingest logs from a VM instance into Cloud Logging, I have installed the Cloud Logging agent, which is built on google-fluentd, the same library that runs in GKE and Cloud Run.

    sudo apt-get install -y google-fluentd
    sudo apt-get install -y google-fluentd-catch-all-config
    sudo service google-fluentd start

    # Add a custom source for the application's purchase logs.
    sudo tee /etc/google-fluentd/config.d/purchase-log.conf <<EOF
    <source>
        @type tail
        # Format 'none' indicates the log is unstructured (text).
        format none
        # The path of the log file.
        path /var/log/cadabra/*.log
        # The path of the position file that records where in the log file
        # we have processed already. This is useful when the agent
        # restarts.
        pos_file /var/lib/google-fluentd/pos/test-unstructured-log.pos
        read_from_head true
        # The log tag for this log input.
        tag purchase-log
    </source>
    EOF

    # Restart so the agent picks up the new configuration.
    sudo service google-fluentd restart
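The routing decision described earlier (store, export or discard) can be pictured with a toy model. This is purely illustrative and is not how Cloud Logging is implemented or configured: the `Sink` class, the `route` function, the destination names and the sample rules are all hypothetical. It only mirrors the idea that each sink has an inclusion rule and optional exclusion rules, and that an entry no sink accepts is dropped.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

LogEntry = Dict[str, str]
Predicate = Callable[[LogEntry], bool]


@dataclass
class Sink:
    """Toy stand-in for a sink: a destination plus include/exclude rules."""
    destination: str
    include: Predicate
    excludes: List[Predicate] = field(default_factory=list)

    def accepts(self, entry: LogEntry) -> bool:
        # An entry must match the inclusion rule and none of the exclusions.
        if not self.include(entry):
            return False
        return not any(rule(entry) for rule in self.excludes)


def route(entry: LogEntry, sinks: List[Sink]) -> List[str]:
    """Return every destination that receives the entry (empty list = discarded)."""
    return [s.destination for s in sinks if s.accepts(entry)]


# Hypothetical rules: store everything except DEBUG, export only purchase logs.
sinks = [
    Sink("logging-bucket", include=lambda e: True,
         excludes=[lambda e: e["severity"] == "DEBUG"]),
    Sink("bigquery", include=lambda e: e["tag"] == "purchase-log"),
]

print(route({"tag": "purchase-log", "severity": "INFO"}, sinks))
print(route({"tag": "syslog", "severity": "DEBUG"}, sinks))
```

The first entry goes to both destinations, while the second matches no sink and is discarded.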

Exporting sends the filtered data to one of these destinations:

- Cloud Storage (archiving and storage)

- BigQuery (serverless query-based analysis)

- Pub/Sub (streaming to log aggregation tools like ELK or Splunk for centralized logging)
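For the Pub/Sub destination, a downstream consumer has to unpack each exported entry before forwarding it to an aggregation tool. Here is a minimal sketch, assuming a push subscription, where the log entry arrives as base64-encoded JSON in `message.data`. The function name `decode_push_message`, the project and subscription names and the sample log line are hypothetical.

```python
import base64
import json


def decode_push_message(envelope):
    """Extract the exported log entry from a Pub/Sub push envelope."""
    data = envelope["message"]["data"]
    entry = json.loads(base64.b64decode(data).decode("utf-8"))
    return {
        "log_name": entry.get("logName"),
        "timestamp": entry.get("timestamp"),
        # Unstructured lines arrive as textPayload, structured ones as jsonPayload.
        "payload": entry.get("textPayload", entry.get("jsonPayload")),
    }


# Hypothetical sample entry, shaped like an unstructured line tagged purchase-log.
sample_entry = {
    "logName": "projects/example-project/logs/purchase-log",
    "timestamp": "2021-01-01T00:00:00Z",
    "textPayload": "1001, widget, 2, 9.99",
}
sample_envelope = {
    "message": {
        "data": base64.b64encode(json.dumps(sample_entry).encode("utf-8")).decode("utf-8"),
        "messageId": "1",
    },
    "subscription": "projects/example-project/subscriptions/log-export",
}

decoded = decode_push_message(sample_envelope)
```

With a pull subscription the data arrives as raw bytes instead, so the base64 step would be dropped.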

All of this code is available as Terraform IaC in the GitHub repo below. https://github.com/acermile/log-analytics-gcp-terraform.git

I have left the Jenkinsfile in the codebase so that this can be extended to a multibranch pipeline.

Connect with Me

If you are interested in a conversation or a chat, please reach me on LinkedIn.